
I’m riding in the 2025 Enbridge Tour Alberta for Cancer, raising money for the Alberta Cancer Foundation, and have so far raised $2,744, exceeding my $2,500 goal and surpassing my 2024 effort!

Help me by donating here

And remember, by donating you earn a chance to win a pair of hand knitted socks!

Ubuntu on the 2017 Lenovo X1 Carbon

I’ve long been an enormous fan of Lenovo equipment, and last year I decided to finally upgrade my aging T410 and get the truly fantastic 2017 revision of the X1 Carbon. A year on it is unquestionably one of the finest pieces of equipment I’ve ever owned.

The laptop naturally shipped with Windows 10, and as an operating system I have few issues with it. My work involves a lot of Microsoft-specific software, including Outlook and Skype for Business, so it’s a good fit for when I want to work from home and not transport my work equipment from the office. But as a development environment it’s only decent. WSL certainly makes the experience a fair bit more enjoyable, but it still feels a little clunky.

Meanwhile, there are definitely a few things that irritate, the most notable being the unplanned reboots for which Windows 10 has become legendary.

Of course, Windows on Intel hardware does have its advantages. Chipsets and peripherals Just Work, and Windows has gotten much better with touchpad support and so forth. So, in the end, I don’t have a lot of complaints. Ultimately, completely leaving Windows behind on this machine just isn’t tenable given my use cases.

But, that doesn’t mean I can’t try Linux out, and so I did with Ubuntu 18.04!

This is just a quick write-up of the installation process and some of the issues I’ve encountered, in case this has any utility for anyone.

First off, honestly, the install process was dead simple. I was a little worried about the UEFI bootloader and so forth, but ultimately I just had to:

- Disable Fast Boot in Windows

- Disable Secure Boot in the BIOS

After that, I carved out some space on the disk from the existing NTFS partition and then installed from a USB key I wrote using Rufus based on the Ubuntu 18.04 ISO.

So far, pretty bog standard stuff, and certainly a far cry from the old days of installing Slackware from a dozen floppies!

The OS itself works so well as to trick one into thinking everything was simply going to work perfectly out of the box!

Not so.

In particular, using the 4.15 kernel that ships with Ubuntu, I ran into a rather bizarre error where the Thunderbolt USB controller would be “assumed dead” by the kernel (Linux’s words). This caused attempts to enumerate the PCI devices to fail, which in turn led to various mysterious failures in any application that happened to use libpci. I can only assume the USB ports were also non-functional, but I never actually checked that.

This led me down an extensive troubleshooting path that was only resolved when I decided to downgrade my kernel.

Yes, that’s right. Downgrade.

Moving to 4.14 resolved the issue, which clearly means a bug was introduced in 4.15 (and before you ask, yes, I tested 4.17, and no, the issue isn’t fixed there either… alas).

Oh, but there’s more!

Sleep on lid close worked wonderfully, except that upon waking, two-finger scrolling broke.

Weird.

Fortunately, there’s a workaround: Add “psmouse.synaptics_intertouch=0” to your Linux kernel boot arguments.
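On Ubuntu, a kernel boot argument is usually made persistent by appending it to the `GRUB_CMDLINE_LINUX_DEFAULT` line in `/etc/default/grub` and then running `update-grub`. Here is a minimal sketch of that edit as a shell function; the name `add_kernel_arg` is hypothetical, and it assumes GNU `sed` and a double-quoted value, as in Ubuntu's stock file.

```shell
#!/usr/bin/env bash
# add_kernel_arg FILE ARG  (hypothetical helper name)
# Appends ARG to the double-quoted GRUB_CMDLINE_LINUX_DEFAULT="..." value in
# FILE (normally /etc/default/grub on Ubuntu), unless ARG already appears there.
# Assumes GNU sed (for -i) and that ARG contains no '/' characters.
add_kernel_arg() {
  local file="$1" arg="$2"

  # Bail out if the file has no GRUB_CMDLINE_LINUX_DEFAULT="..." line to edit.
  if ! grep -q '^GRUB_CMDLINE_LINUX_DEFAULT="' "$file"; then
    echo "add_kernel_arg: no GRUB_CMDLINE_LINUX_DEFAULT line in $file" >&2
    return 1
  fi

  # Already present on the line? Then there is nothing to do.
  if grep -q "^GRUB_CMDLINE_LINUX_DEFAULT=\".*${arg}" "$file"; then
    return 0
  fi

  # Insert " ARG" just before the closing quote of the existing value,
  # e.g. "quiet splash" becomes "quiet splash psmouse.synaptics_intertouch=0".
  sed -i "s/^\(GRUB_CMDLINE_LINUX_DEFAULT=\"[^\"]*\)\"/\1 ${arg}\"/" "$file"
}
```

To apply it for real, back up the file first (`sudo cp /etc/default/grub /etc/default/grub.bak`), run `add_kernel_arg /etc/default/grub psmouse.synaptics_intertouch=0` from a root shell with the function loaded, then run `sudo update-grub` and reboot. Afterwards, `cat /proc/cmdline` should include the new argument.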

With those two workarounds, this thing actually seems to be working pretty well (as evidenced by the fact that I’m writing this post on Ubuntu on my laptop). Wifi clearly works, as does Bluetooth, sound, and accelerated video. USB devices appear to work (though I’ve only just started testing). Plugging in an external HDMI TV works flawlessly. Suspend works perfectly (though I’ve still not gotten hibernate working).

In short, so far the essentials are functional.

Meanwhile, amazingly, battery life seems to be as good as under Windows, something I certainly couldn’t claim in the past. And that’s without any kind of tweaking.

Update: I installed TLP plus the associated ThinkPad kernel module, and when just doing light browsing or typing on a full charge, Ubuntu reports an astonishing fourteen hours of battery life. Windows has never come close!

In short, while this setup is clearly still not for amateurs (having to downgrade kernels and fiddle with boot arguments to get basic functionality working is not for the faint of heart), it does seem to work well once those issues are overcome.

The real question is one of long-term stability (and utility). Only time will tell!

Update:

Windows assumes the hardware clock is in local time. Ubuntu defaults to assuming the hardware clock is in UTC (which is almost certainly the right thing to do).

This means that switching between the two results in the clock getting set incorrectly!

The simplest solution is to make Ubuntu match Windows’ braindead setting as follows:

timedatectl set-local-rtc 1 --adjust-system-clock

Confirm by running timedatectl without any arguments, which should result in this warning:

Warning: The system is configured to read the RTC time in the local time zone. This mode can not be fully supported. It will create various problems with time zone changes and daylight saving time adjustments. The RTC time is never updated, it relies on external facilities to maintain it. If at all possible, use RTC in UTC by calling 'timedatectl set-local-rtc 0'.

Homegrown Backups

I mentioned a while back that I’d moved to using my NUC as a backup storage device, and that continues to be a core use case after I repaved and moved the thing back over to Ubuntu.

Fortunately, as a file server, Linux is definitely more capable and compatible than macOS (which is why, back when it was a Hackintosh, I used a Linux VM as the SMB implementation on my LAN), and so I’ve already got backups re-enabled and working beautifully.

But the next step is enabling offsite copies.

Previously, I achieved this with Google Drive for macOS, backing up the backup directory to the cloud, a solution which worked pretty well overall! Unfortunately, Google provides no client for Linux, which left me in a bit of a jam.

Until I discovered the magic that is rclone.

rclone is, plain and simple, a command-line interface to cloud storage platforms. And it’s an incredibly capable one! It supports one-way folder synchronization (it doesn’t support two-way, but fortunately I don’t need that capability), which means that it’s the perfect solution for syncing a local backup folder to an offsite, cloud-stored backup.
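A one-way offsite copy boils down to a single `rclone sync SOURCE DEST` call, where DEST is a `remote:path` set up beforehand with the interactive `rclone config` wizard. The wrapper below is a hypothetical sketch: the function name `offsite_sync` is made up, and its only job beyond calling rclone is a couple of sanity checks. Because `rclone sync` removes files from DEST that aren't in SOURCE, syncing from a missing or empty directory could wipe the offsite copy.

```shell
#!/usr/bin/env bash
# offsite_sync SRC DEST [extra rclone flags...]  (hypothetical helper name)
# One-way sync of a local backup directory to an rclone remote path that was
# configured earlier with `rclone config` (e.g. a crypt remote over Google Drive).
offsite_sync() {
  if [ "$#" -lt 2 ]; then
    echo "usage: offsite_sync SRC DEST [rclone flags...]" >&2
    return 2
  fi
  local src="$1" dest="$2"
  shift 2

  # The source must exist as a directory.
  if [ ! -d "$src" ]; then
    echo "offsite_sync: source directory '$src' not found" >&2
    return 1
  fi

  # Refuse to mirror an empty directory, since sync deletes extra files at DEST.
  if [ -z "$(ls -A "$src")" ]; then
    echo "offsite_sync: '$src' is empty; refusing to sync" >&2
    return 1
  fi

  # Make DEST match SRC; SRC itself is never modified. Extra args pass through.
  rclone sync "$src" "$dest" "$@"
}
```

For example, `offsite_sync /srv/backups gdrive-crypt: --dry-run` would report what rclone intends to copy or delete without changing anything, and dropping `--dry-run` performs the real sync. The path and the remote name `gdrive-crypt:` are placeholders.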

But wait, there’s more!

rclone also supports encryption, which means I can protect those offsite backups from prying eyes, something Google’s Drive sync tool does not offer. That does assume I don’t lose the keys, but they’re safely stored in my KeePass database, which is itself cloned to multiple locations using my other favourite tool, Syncthing.

I can also decide when I want the synchronization to occur! I don’t need offsites done daily. Weekly would be sufficient, and that’s a simple crontab entry away.
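As an illustration, the weekly schedule could be a single line added with `crontab -e`. This is a config fragment under assumptions: `gdrive-crypt:` is a hypothetical crypt remote (rclone's encryption layer, created with `rclone config`) wrapping a Google Drive folder, `/srv/backups` and the log file name are placeholders, and `rclone` is assumed to be on cron's default PATH.

```
# minute hour day-of-month month day-of-week  command
# Hypothetical weekly offsite run: every Sunday at 03:00, logging to a file.
0 3 * * 0  rclone sync /srv/backups gdrive-crypt: --log-file=$HOME/rclone-offsite.log
```

cron hands the command to `/bin/sh`, so `$HOME` expands to the crontab owner's home directory, and rclone finds that user's configuration as usual.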

Now, to be clear, rclone would have worked just as well on the Hackintosh, so if you’re a Mac user who’d like to take advantage of rclone’s capabilities, you can absolutely do so! But for this Linux user, it was a pleasant surprise!

Hackintosh Retrospective

Well, it’s finally happened. After a year of running semi-successfully, I’ve decided the Hackintosh was no longer worth the trouble and it was time to retire it.

As a project it was certainly a lot of fun, and macOS definitely has its attraction. In the end, though, I found my NUC was serving a few core functions for which macOS wasn’t uniquely or especially suited:

- Torrent download server

- Storage target for laptop backups

- Media playback of local content as well as Netflix

Of course, the original plan was to also use the machine as a recording workstation, but so far it hasn’t worked out that way. Yet, anyway.

Prior to a recent security update, there were a few issues that I generally worked around:

- Onboard Bluetooth and the SD card reader don’t work.

- The onboard Ethernet adapter stopped working under heavy utilization.

- Built-in macOS SMB support is broken when used as a target for Windows file-based backups.

I worked around these issues by:

- Avoiding unsupported hardware.

- Using an external Ethernet dongle.

- Performing all SMB file serving via a Linux VM running on VirtualBox.

Meanwhile, the threat of an OS update breaking the system always weighed on me.

Unfortunately, it didn’t weigh on me enough: the 2018-001 macOS security update broke things pretty profoundly, as the Lilu kernel extension started crashing the system on boot.

A bit of research led me to update Lilu, plus a couple of related kexts while I was at it, which brought the system back to a basically functioning state, except now:

- HDMI audio no longer worked.

- The USB Ethernet dongle stopped working.

The first issue rendered the machine unusable as a video playback device, a use case which is surprisingly common (my office is a very cozy place to watch Star Trek or MST3K!).

The latter left me with a flaky file/torrent server.

In short, all the major use cases I had for the Hackintosh no longer worked reliably, or at all.

Meanwhile, nothing I was doing uniquely relied on macOS. I’ll probably never get into iOS development, and the only piece of software I’d love to have access to is OmniGraffle (I never got far enough into recording tools to get attached to them).

So, with no major benefits and a whole lot of pain, the pragmatic conclusion was that the Hackintosh was no longer serving a useful function.

So what did I replace it with?

Ubuntu 18.04, of course!

So far it’s been a very nice experience, with the exception of systemd-resolved, which makes me want to weep silently (it was refusing to resolve local LAN domain names for reasons I never figured out). Fortunately, that was easily worked around, and I’m now typing this on a stable, capable, compatible Linux server/desktop.

When I do finally get back to recording, I’ll install a low-latency kernel, JACK, and Ardour, and then move on with my life!

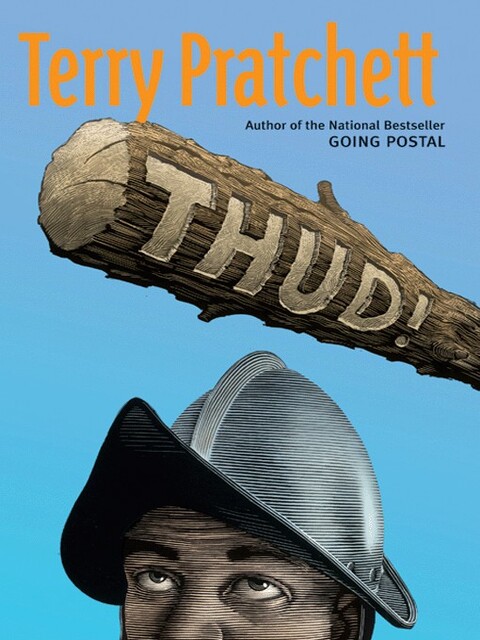

Review: Thud!

Review of Thud! ([Discworld](https://en.wikipedia.org/wiki/Discworld) #34) by Terry Pratchett

ISBN 9780060815318 ★★★★

Once, in a gods-forsaken hellhole called Koom Valley, trolls and dwarfs met in bloody combat. Centuries later, each species still views the other with simmering animosity. Lately, the influential dwarf, Grag Hamcrusher, has been fomenting unrest among Ankh-Morpork's more diminutive citizens—a volatile situation made far worse when the pint-size provocateur is discovered bashed to death . . . with a troll club lying conveniently nearby.

Commander Sam Vimes of the City Watch is aware of the importance of solving the Hamcrusher homicide without delay. (Vimes's second most-pressing responsibility, in fact, next to always being home at six p.m. sharp to read Where's My Cow? to Sam, Jr.) But more than one corpse is waiting for Vimes in the eerie, summoning darkness of a labyrinthine mine network being secretly excavated beneath Ankh-Morpork's streets. And the deadly puzzle is pulling him deep into the muck and mire of superstition, hatred, and fear—and perhaps all the way to Koom Valley itself.

“What kind of human creates his own policeman?”

“One who fears the dark.”

“And so he should,” said the entity, with satisfaction.

“Indeed. But I think you misunderstand. I am not here to keep darkness out. I’m here to keep it in.”

In Monstrous Regiment, Terry Pratchett explored the insanity of war. Here Pratchett shows us the insanity of hate, hate that even our hero Sam Vimes falls victim to. It’s a brilliant turn, showing that even the best of men can be consumed by hate if the circumstances are right. But far from fatalistic, Terry reminds us that there is an antidote: justice.